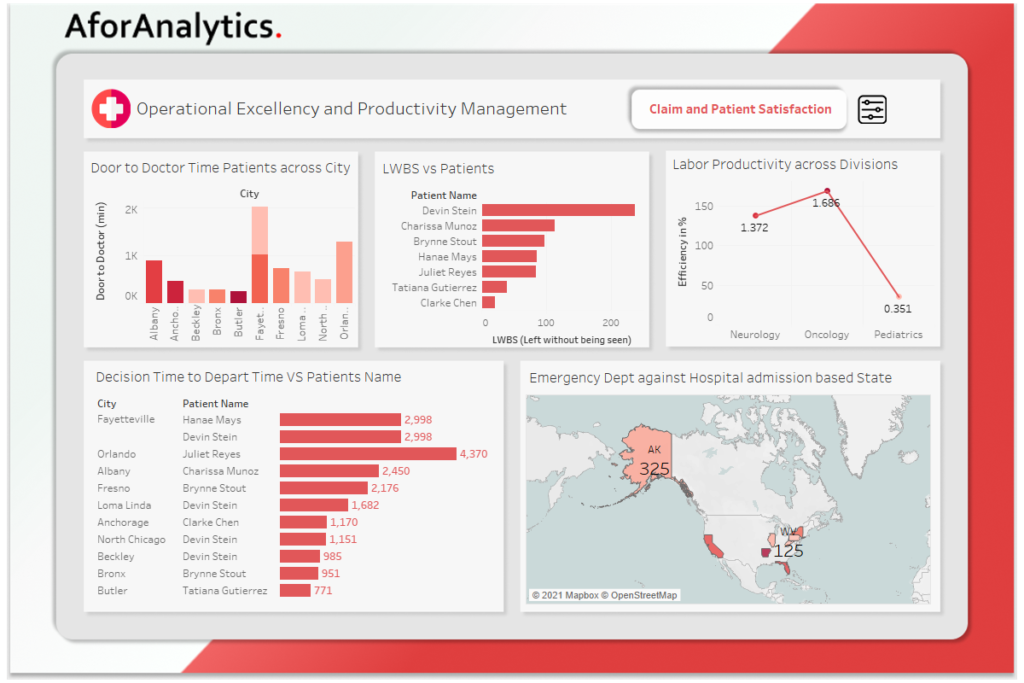

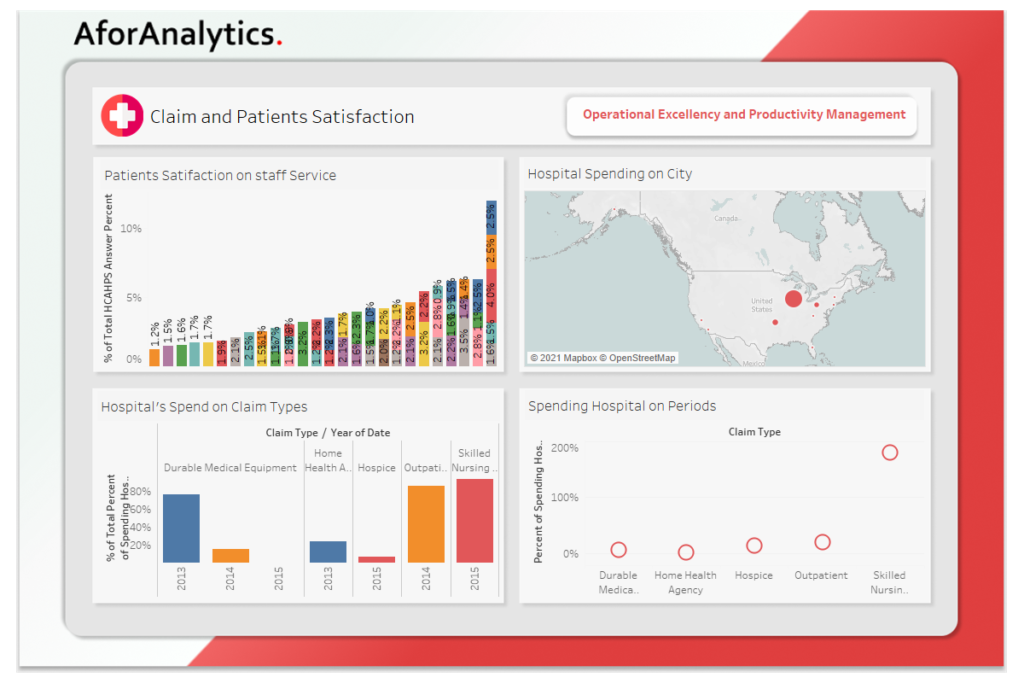

We A for Analytics handles several cases when it comes to performance management. Performance management is everyone’s concern in a shared self-service environment, since nobody wants to be impacted by others.

This is especially true when each business unit decides its own publishing criteria and the central IT team does not gate the publishing process.

How to protect the shared self-service environment? How to prevent one badly designed query from bringing all servers to their knees?

- First, set server parameters to enforce policy.

- Second, create daily alerts for any slow dashboards.

- Third, make performance metrics public to your internal community so everyone can see the worst-performing dashboards, which creates some well-intentioned peer pressure.

- Fourth, hold site admins or business leads accountable for self-service dashboard performance.

You will be in good shape if you do these four things. Let me explain each of them in detail.

1. Server policy enforcement

Server policy settings are for enforced policies. Anything that can be enforced is better enforced, so everyone can have peace of mind. The enforced parameters should be agreed upon by business and IT, ideally in the governance council. The parameters can always be reviewed and revised when the situation changes.

Examples of commonly enforced parameters include the overall size allocation for a site, the extract timeout, etc.

2. Exception alerts

There are only a few parameters that you can control through enforcement. Everything else has to be governed by process. Alerts are the most common way to handle exception management:

- Performance alerts: Create alerts when dashboard render time exceeds the agreed threshold.

- Extract size alerts: Create alerts when extract size exceeds defined thresholds (extract timeout can be enforced on the server, but size cannot).

- Extract failure alerts: Create alerts for failed extracts. Very often stakeholders do not know that an extract failed. It is essential to let owners know that their extracts failed so they can act in time.

- You can create many more alerts, for CPU usage, overall storage, memory, etc.
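The three content alerts above can be sketched as a simple rule check. This is only a minimal illustration, assuming you already export per-workbook statistics from your server; the `WorkbookStats` fields, the threshold constants, and the sample data are all hypothetical, not anything a particular server product provides.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real values should be agreed on by the governance council.
RENDER_THRESHOLD_SECONDS = 10.0
EXTRACT_SIZE_THRESHOLD_MB = 1024.0


@dataclass
class WorkbookStats:
    name: str
    owner: str
    render_seconds: float    # recent render time of the workbook's slowest view
    extract_size_mb: float   # size of the workbook's extract
    extract_failed: bool     # True if the most recent extract refresh failed


def find_exceptions(stats: list[WorkbookStats]) -> list[tuple[str, str]]:
    """Return (workbook name, alert type) pairs for every rule a workbook breaks."""
    alerts = []
    for wb in stats:
        if wb.render_seconds > RENDER_THRESHOLD_SECONDS:
            alerts.append((wb.name, "performance"))
        if wb.extract_size_mb > EXTRACT_SIZE_THRESHOLD_MB:
            alerts.append((wb.name, "extract_size"))
        if wb.extract_failed:
            alerts.append((wb.name, "extract_failure"))
    return alerts


# Made-up sample data for illustration only.
sample = [
    WorkbookStats("Sales Overview", "alice", 3.2, 200.0, False),
    WorkbookStats("Inventory Deep Dive", "bob", 14.5, 2048.0, False),
    WorkbookStats("Daily Orders", "carol", 4.0, 150.0, True),
]

for name, kind in find_exceptions(sample):
    print(f"{kind} alert: {name}")
```

A job like this would typically run once a day against the previous day's statistics.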

Who should receive the alerts? It depends. Many alerts, such as CPU usage, memory, and storage, are for the server admin team only.

However, most extract and performance alerts are for content owners. One best practice for content alerts is to always include site admins and/or project owners as recipients.

Why? Workbook owners may change jobs, so the original owner may no longer be responsible for the workbook. I was talking with a well-known Silicon Valley company recently. They told me that many workbook owners had changed in the last two years, and they had a hard time figuring out whom to contact about workbook issues. Site admins should be able to help identify the new owners. If site admins are not close enough to the workbook level in your implementation, you can choose project leaders instead.
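A minimal sketch of that recipient rule, assuming you can look up site admins and project leads for each workbook. The function name, its parameters, and the `owner_is_active` flag are hypothetical names chosen for illustration.

```python
def alert_recipients(owner, site_admins, project_leads, owner_is_active=True):
    """Build the recipient list for a content alert.

    Site admins and project leads are always included, so the alert still
    reaches someone responsible when the original owner has moved on.
    """
    recipients = []
    if owner_is_active:
        recipients.append(owner)
    recipients.extend(site_admins)
    recipients.extend(project_leads)
    # Remove duplicates while keeping the original order.
    return list(dict.fromkeys(recipients))


print(alert_recipients("alice", ["sam"], ["pat", "sam"]))
print(alert_recipients("alice", ["sam"], ["pat"], owner_is_active=False))
```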

What should the threshold be? There is no universal answer, but nobody wants to wait more than 10 seconds.

The rule of thumb is that anything under 5 seconds is good and anything over 10 seconds is not. I got a question when I presented this at a local Tableau event: what if a specific query used to take 30 minutes, and the team made great progress and reduced it to 3 minutes? Do we allow this query to be published and run on the server? The answer is that it depends. If the view is critical for the business, it is of course worth waiting 3 minutes for the results to render. Every rule has exceptions. However, if the 3-minute query chokes everything else on the server, and users can trigger it often by clicking the view, you may want to rethink the architecture. Maybe the right answer is to spin off a separate server just for this mission-critical 3-minute application so the rest of the users are not impacted.

Yellow and red warnings: It is good practice to create multiple warning levels, such as yellow and red, with different thresholds. Yellow alerts are warnings, while red alerts call for action.
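Here is a small sketch of a two-level check. Mapping the 5-second and 10-second rule of thumb onto the yellow and red levels is my assumption for illustration; your governance council should set the actual thresholds, and `classify_render_time` is a hypothetical helper name.

```python
def classify_render_time(seconds, yellow_threshold=5.0, red_threshold=10.0):
    """Classify a dashboard render time as 'ok', 'yellow', or 'red'.

    Assumed mapping: under the yellow threshold is fine, over the red
    threshold requires action, and anything in between is a warning.
    """
    if seconds > red_threshold:
        return "red"
    if seconds >= yellow_threshold:
        return "yellow"
    return "ok"


for render_time in (3.0, 7.5, 12.0):
    print(render_time, classify_render_time(render_time))
```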

You may say, "Hi Mark, this all sounds great, but what if people do not take action?"

This is exactly where some self-service deployments go wrong, and it is where governance comes into play. In short, you need strong, agreed-upon process enforcement:

- Some organizations use a charge-back process to motivate good behavior. Charge-back influences people’s behavior but cannot enforce anything.

- The key to process enforcement is a penalty system for when red alert actions are not taken in time.

If the owner does not take corrective action within the agreed period after a red warning, a meeting should be arranged to discuss the situation. If the site admin refuses to take action, the governance body has to decide on the agreed-upon penalty. The penalty can lead to site suspension.
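The escalation path can be written down as a simple decision function. This is only a sketch of the process described above; the 14-day grace period, the function name, and the step labels are hypothetical placeholders for whatever your governance body agrees on.

```python
from datetime import date, timedelta

# Hypothetical grace period; use the period agreed with your governance body.
GRACE_PERIOD = timedelta(days=14)


def next_step(raised_on, today, resolved, admin_refused=False):
    """Return the next process step for a red alert."""
    if resolved:
        return "close"
    if admin_refused:
        # The governance body decides on the agreed penalty, up to site suspension.
        return "governance_decision"
    if today - raised_on > GRACE_PERIOD:
        return "schedule_meeting"
    return "wait"


print(next_step(date(2024, 1, 1), date(2024, 1, 10), resolved=False))
print(next_step(date(2024, 1, 1), date(2024, 1, 20), resolved=False))
```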

Once a site is suspended, nobody except server admins can access any of its content. The site owners have to work on the improvement actions and show compliance before the site can be reactivated. The good news is that all the content is still there when a site is suspended, and it takes a server admin less than 10 seconds to suspend or reactivate a site.

I had this policy agreed with the governance body, and I communicated it to as many self-service developers as I could. I never got pushback on it. It is clear to me that the self-service community likes to have a strong and clearly defined governance process that ensures everyone’s success. I have suspended a site for other reasons, but I never had to suspend one because of performance alerts. The reason is my third trick: visibility of the worst-performing dashboards.

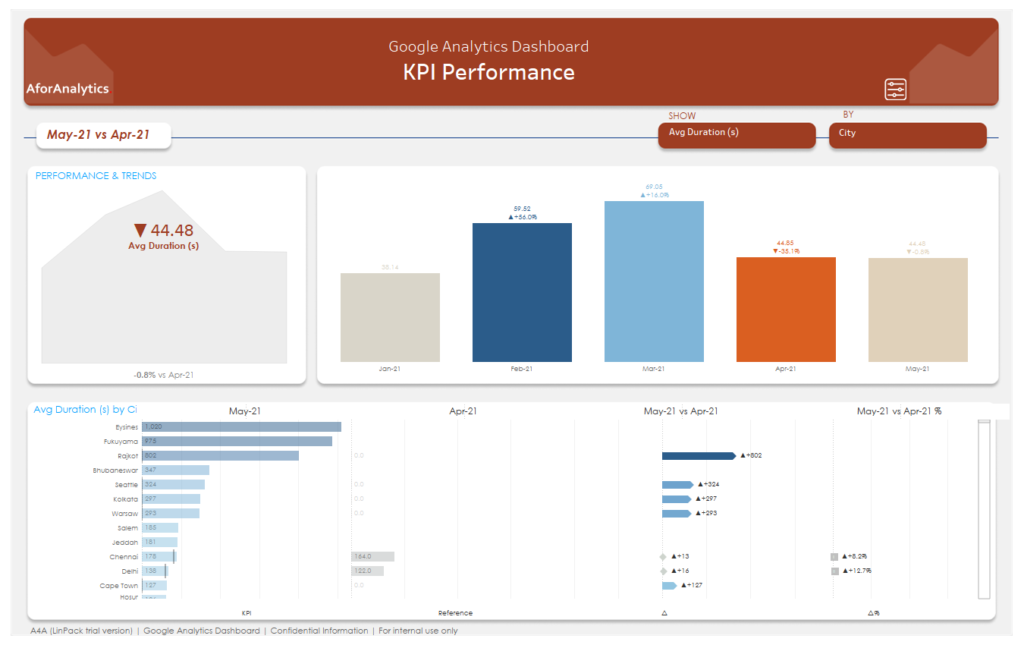

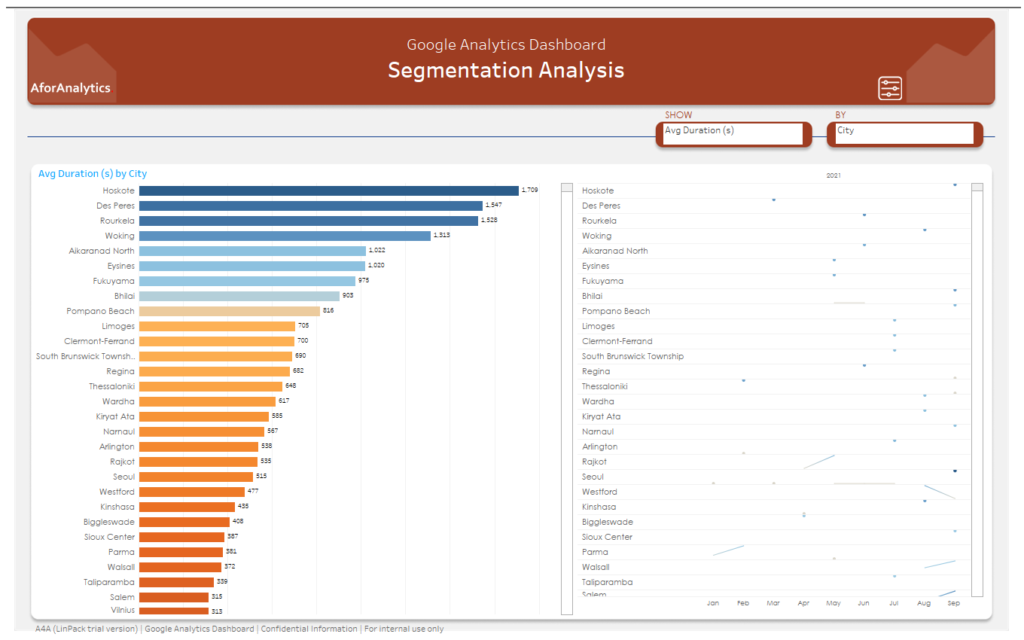

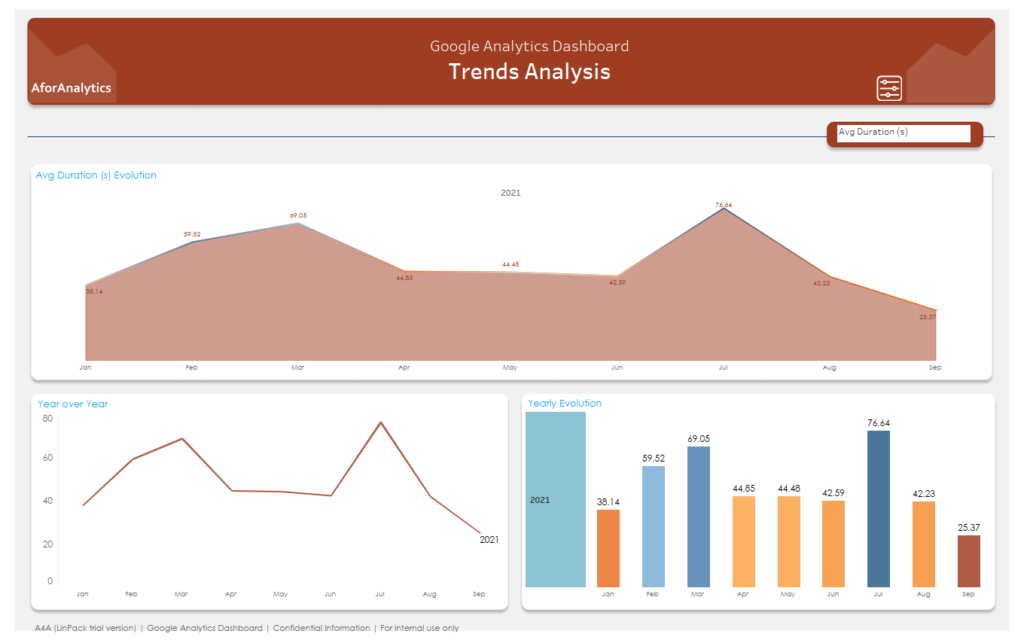

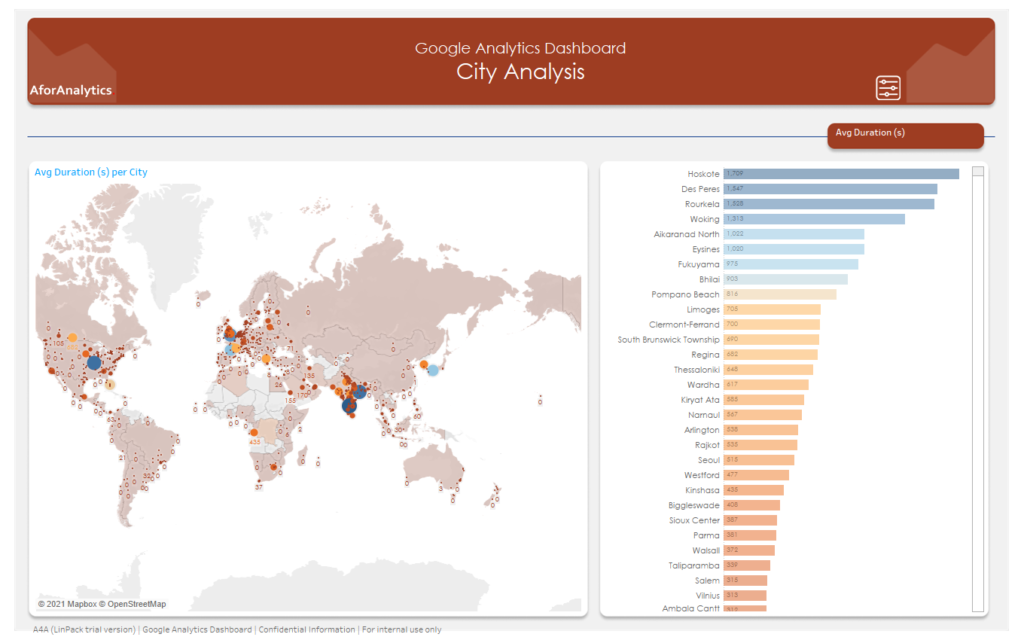

3. Make performance metrics public

It takes some effort to make your server dashboard performance metrics public to your whole internal community, but it turns out to be one of the best things a server team can do. It has a few benefits:

- It serves as a benchmark that helps the community understand what is good and what is good enough, since the metrics show your site's overall performance compared with others on the server.

- It shows all the slow-rendering dashboards, which creates peer pressure.

- It shows patterns that help people focus on the problem areas.

- It creates a great opportunity for the community to help each other. This is one of the most important success factors. It turns out that the problem areas are often teams newly onboarded to the server, and the community always has plenty of ideas for making dashboards perform much better. This is why we never had to suspend any site: when there are many red alerts that the community is aware of, the whole community makes things happen, which is awesome.
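To make the benchmarking idea concrete, here is a minimal sketch that compares each site's median render time with the server-wide median and lists the slowest dashboards. The sites, dashboards, and timings are made-up sample data, and the helper names are hypothetical; in practice the rows would come from whatever usage data your server records.

```python
from collections import defaultdict
from statistics import median

# (site, dashboard, render time in seconds); invented sample data.
renders = [
    ("Finance", "Budget Tracker", 2.0),
    ("Finance", "Forecast", 4.0),
    ("Marketing", "Campaign Funnel", 12.5),
    ("Marketing", "Web Traffic", 6.0),
    ("Operations", "Plant Status", 3.5),
]


def site_medians(rows):
    """Median render time per site, for comparison against the whole server."""
    by_site = defaultdict(list)
    for site, _dashboard, seconds in rows:
        by_site[site].append(seconds)
    return {site: median(values) for site, values in by_site.items()}


def slowest(rows, n=3):
    """The n slowest dashboards, slowest first."""
    return sorted(rows, key=lambda row: row[2], reverse=True)[:n]


server_median = median(seconds for _site, _dashboard, seconds in renders)

for site, value in site_medians(renders).items():
    status = "slower than" if value > server_median else "at or below"
    print(f"{site}: median {value}s, {status} the server median of {server_median}s")

for site, dashboard, seconds in slowest(renders, n=2):
    print(f"{dashboard} ({site}): {seconds}s")
```

Publishing both views side by side gives each site a benchmark and makes the long-running dashboards visible to everyone.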

4. Hold site admins accountable

I managed a Hewlett Packard product assembly line early in my career. Hewlett Packard has some well-known quality control processes. One thing I learned was that each assembler is responsible for his or her own quality. Although there is QA at the end of the line, each workstation has a checklist to complete before passing work to the next station. This simple philosophy applies to today’s software development and self-service analytics environments. The site admin is responsible for the performance of the workbooks in the site. The site admin can in turn hold workbook owners accountable for the shared workbooks. Flexibility comes with accountability.

I believe in Theory Y (people have good intentions and want to perform better), and I have been practicing it for years. The whole intent of server dashboard performance management is to give the community and content owners visibility into performance, so owners know where the issues are and can take action.

What I often see is that a well-performing dashboard degrades over time because of data changes and many other factors. The alerts catch all of those exceptions, whether your dashboards were released yesterday, last week, last month, or last year. This approach is much better than gating the release process, which is a common IT practice.

During a recent Run-IT as business meet-up, the audience was skeptical when I said that IT did not gate any workbook publishing and that the process was completely self-service. Then they started to see that it made sense once I talked about the performance alerts that catch these problems.

What the business likes most about this approach is the freedom to push urgent workbooks to the server even when they are not yet performing well. They can always come back later and tune them, both for a better user experience and to be good citizens.